Key Takeaways:

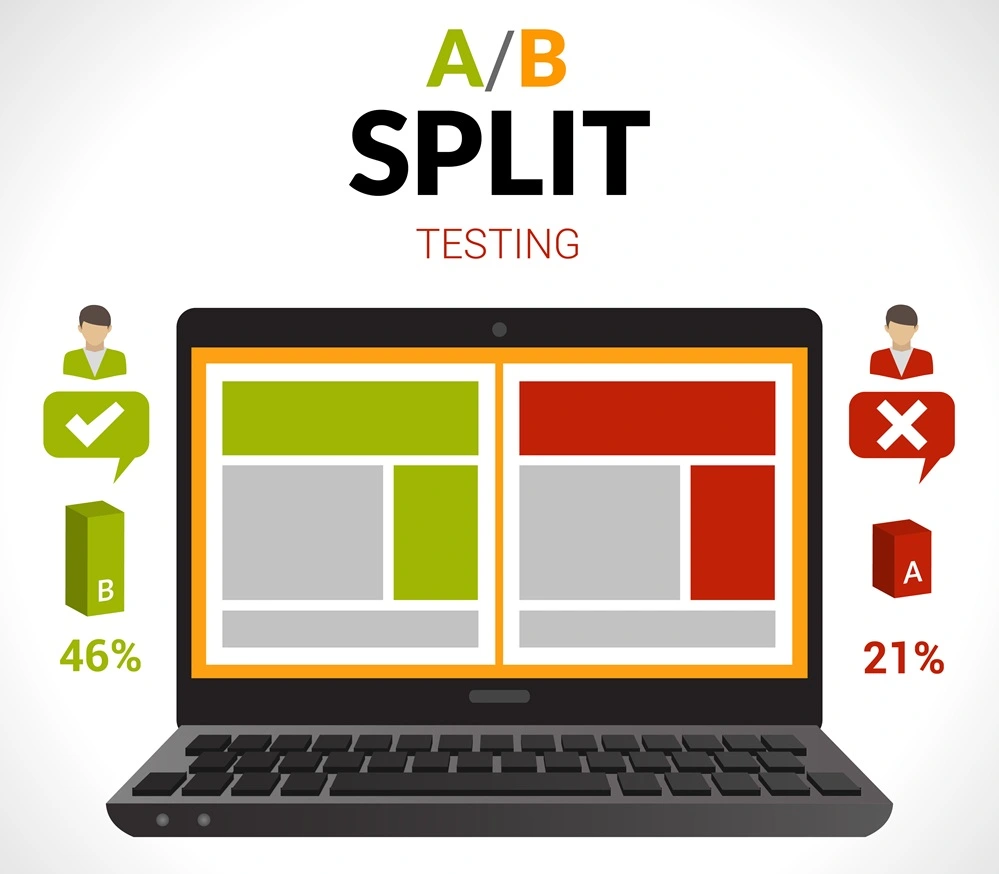

- A/B testing (also known as split testing) helps you compare two versions of an ad or landing page to see which performs better.

- To succeed with A/B testing: define clear goals and KPIs; change only one variable at a time; run the test for enough time and get sufficient traffic.

- Focus your tests on the highest‑impact elements like headlines, calls‑to‑action, creative visuals, and audience segments.

- Avoid common mistakes: ending the test too early, testing multiple variables at once, and declaring a winner before the result is statistically significant.

Introduction

In the world of digital marketing, your ad campaigns generate data, but how often do you act on it? If you’re running ads on platforms like Meta (Facebook/Instagram), Google Ads, LinkedIn, or native display networks in India (or globally), you’ll want to continuously improve performance: better click‑through rates (CTR), higher conversions, lower cost‑per‑acquisition (CPA), and improved return on ad spend (ROAS).

This is where A/B testing becomes your secret weapon. Instead of guessing what might work, you test, measure, and then implement the version that wins. Whether you’re an aspiring digital marketer just getting started, or a working professional looking to optimise campaigns, this blog will walk you through how to use A/B testing effectively, step by step, with practical tips.

What is A/B Testing & Why It Matters

At its core: you show Version A (the control) to a portion of your audience and Version B (the variant) to another portion. You then compare which version performs better against your key metric (conversion rate, sign‑ups, click‑throughs etc.).

Why this matters for ad performance

- It replaces guesswork with evidence. As one source puts it: “The fundamental value proposition of A/B testing is its ability to replace subjective decision‑making with objective, quantitative data.”

- You identify what resonates with your audience, not just what seems logically better.

- You optimise budget by pushing the winners, and stopping the underperformers.

- Over time this builds a culture of experimentation and continuous improvement.

Become an AI-powered Digital Marketing Expert

Master AI-Driven Digital Marketing: Learn Core Skills and Tools to Lead the Industry!

Getting Started: Define Your Goals, Audience & Hypothesis

Step A: Define Your Goal

Start by asking: What do I want to improve? For example:

- Increase ad click‑through rate by 20% in India for a mobile app install campaign.

- Decrease cost per acquisition (CPA) for leads in the Indian market to ₹ 300 or less.

- Improve ROAS from 3× to 5× for e‑commerce ads in INR.

Step B: Identify Audience Segments

Decide who you will test on:

- Age groups, gender, geography (Kerala vs India vs South Asia)

- Platforms (mobile vs desktop)

- New vs returning users

Segmenting helps you test relevant messages.

Step C: Formulate Your Hypothesis

A hypothesis might be: “If I change the CTA from ‘Buy Now’ to ‘Shop Now – Free Delivery’ then conversions will increase by at least 15 %.”

Why a hypothesis? Because testing without one is random and wastes budget.

Step D: Choose Your KPI

Selecting the right key performance indicator (KPI) is vital:

- For ads: CTR, CPC, conversions, CPA, ROAS.

- For landing pages: conversion rate, bounce rate.

- For email/SMS: open rate, click rate.

Define your KPIs before you begin, so the metric that decides the winner is fixed before any results come in.
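To make the ad KPIs above concrete, here is a minimal sketch of how they are calculated from raw campaign counts. The `AdStats` class, its field names, and the sample figures are illustrative assumptions, not an export format from any ad platform.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw counts for one ad version (field names are illustrative)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float    # total ad spend, e.g. in INR
    revenue: float  # revenue attributed to the ad, same currency


def kpis(s: AdStats) -> dict:
    """Compute common ad KPIs; a ratio is None when its denominator is zero."""
    def ratio(num, den):
        return num / den if den else None

    return {
        "ctr": ratio(s.clicks, s.impressions),             # click-through rate
        "cpc": ratio(s.spend, s.clicks),                   # cost per click
        "conversion_rate": ratio(s.conversions, s.clicks),
        "cpa": ratio(s.spend, s.conversions),              # cost per acquisition
        "roas": ratio(s.revenue, s.spend),                 # return on ad spend
    }


# Hypothetical baseline figures, used only to show the arithmetic.
baseline = kpis(AdStats(impressions=50_000, clicks=1_000, conversions=40,
                        spend=14_000.0, revenue=42_000.0))
print(baseline)
```

Recording these numbers for the control before the test starts gives you the baseline that every later result is compared against.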

Decide What to Test (And What Not to Test)

High‑Impact Elements You Can Test

Here are typical ad or landing page elements you could test:

- Headline or primary message

- Visual creative (image vs video, colour, layout)

- Call‑to‑action (CTA) copy, placement, colour

- Audience targeting (segment A vs segment B)

- Offer or value proposition (e.g., “Free Shipping in India” vs “10 % off first purchase”)

- Landing page layout or form length

Adobe’s guidance highlights many of these: “Split test your copy, page layout, navigation, CTA…”

What to avoid or postpone

- Testing multiple variables at once (e.g., changing headline and visual and CTA) makes it hard to isolate what caused the difference.

- Testing when traffic is too low, because the results won’t reach statistical significance.

- Rushing to implement after a small sample size.

Design & Launch Your Test

Step 1: Setup Control & Variant

- Version A = existing ad or landing page.

- Version B = the modified version with one element changed.

Ensure everything else is held constant: same audience, same budget, same duration.

Step 2: Split Traffic

Allocate traffic evenly (50/50) or unevenly (e.g., 60/40) depending on your risk appetite. The key is fairness: assignment should be random so that both groups are comparable.
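Ad platforms handle the split for you, but if you are testing a landing page you control, the assignment can be sketched as below. This is one common approach (hashing a user ID), shown under the assumption that you have a stable identifier per visitor. The function name and the experiment label are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Deterministically map a user to 'A' or 'B'.

    Hashing the user ID together with the experiment name gives each user a
    stable, effectively random position in [0, 1); users whose position falls
    below share_a see Version A, everyone else sees Version B.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    position = int(digest[:8], 16) / 2**32  # first 32 bits scaled to [0, 1)
    return "A" if position < share_a else "B"


# Simulate 10,000 visitors to check that a 50/50 split comes out roughly even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-free-shipping")] += 1
print(counts)
```

Because the assignment depends only on the ID and the experiment name, a returning visitor always sees the same version, which keeps the two groups cleanly separated.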

Step 3: Choose Test Duration & Sample Size

You must run the test long enough and with enough traffic to achieve statistical significance. A common rule: minimum one week (if traffic allows) and enough conversions to make conclusions reliable.
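How much traffic is “enough” can be estimated before launch. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 5 % significance level and 80 % power are conventional defaults assumed here, not values from this article, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.04 for 4 %
    relative_lift: smallest lift worth detecting, e.g. 0.15 for +15 %
    """
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 4 % baseline conversion rate, +15 % target lift.
n = sample_size_per_variant(0.04, 0.15)
print(n)
```

With these assumed inputs the estimate comes out at roughly 18,000 visitors per variant. The requirement drops quickly as the lift you want to detect gets larger, which is why small accounts often test bolder changes.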

Step 4: Launch the Test

Use your ad platform’s split‑testing functionality (e.g., Facebook A/B test, Google Ads Experiments) or a landing‑page testing tool. Add UTM parameters to your destination URLs where needed so you can tell the versions apart in analytics (Google Analytics/GA4).
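UTM tagging can be scripted so that both versions get consistent labels. This is a small sketch using Python’s standard library. The URL, campaign name, and `version_a`/`version_b` labels are made-up examples, and using `utm_content` to distinguish variants is a common convention rather than a requirement.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str, content: str) -> str:
    """Return the URL with UTM parameters added, keeping existing query params."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # identifies which ad version sent the click
    })
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical landing page and labels for the two versions of one test.
landing = "https://example.com/sarees?ref=home"
url_a = add_utm(landing, "facebook", "paid_social", "free_shipping_test", "version_a")
url_b = add_utm(landing, "facebook", "paid_social", "free_shipping_test", "version_b")
print(url_a)
print(url_b)
```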

Step 5: Monitor but Don’t Interfere

Avoid pausing or altering the test mid‑course. Let it run its duration so results are valid.

Analyse Results & Make Decisions

Step 1: Review the Data

Compare the performance of A vs B across your chosen KPI(s). Also look at secondary metrics (CTR → conversions → cost per conversion).

Step 2: Determine Winner

If Variant B significantly outperforms Variant A for your target KPI (at the desired confidence level), you’ve found the winner. Some platforms will mark it with “95 % confidence”.
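If your platform does not report a confidence level, you can check significance yourself. The sketch below uses a two-proportion z-test, which is one standard way to compare conversion rates. It is an assumption that this matches what any particular platform computes internally, and the counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test: no variation in the pooled data")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: A converts 400 of 10,000 visitors, B converts 480 of 10,000.
z, p_value = two_proportion_z_test(400, 10_000, 480, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 95 %" if p_value < 0.05 else "not significant at 95 %")
```

A p-value below 0.05 corresponds to the “95 % confidence” threshold mentioned above. With the assumed counts, the difference clears that bar.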

Step 3: Implement & Roll‑out

Once a winner is selected:

- Pause the losing version.

- Roll out the winning version to full traffic.

- Document results: lift achieved (%), impact on cost/ROI.

Step 4: Learn & Iterate

- Ask why the winner won: What element made the difference?

- Use the learning to formulate the next hypothesis. A/B testing is a continuous cycle.

- Maintain a “Test log” or “Experiment database” with each hypothesis, result, lift (especially useful for agencies or freelancers).
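A test log does not need special software. As a minimal sketch, each experiment can be one row in a CSV file. The `ExperimentRecord` fields below are an assumed layout based on the items listed above (hypothesis, result, lift), and the sample values are hypothetical.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class ExperimentRecord:
    """One row of the test log (field names are illustrative)."""
    name: str
    hypothesis: str
    kpi: str
    control_value: float
    variant_value: float
    winner: str
    next_idea: str

    @property
    def lift_pct(self) -> float:
        """Relative change of the variant over the control, in percent."""
        return (self.variant_value - self.control_value) / self.control_value * 100


def append_entry(path: Path, record: ExperimentRecord) -> None:
    """Append one record to the CSV log, writing the header for a new file."""
    is_new = not path.exists()
    row = {**asdict(record), "lift_pct": round(record.lift_pct, 1)}
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(row))
        if is_new:
            writer.writeheader()
        writer.writerow(row)


record = ExperimentRecord(
    name="cta-free-shipping",
    hypothesis="A free-shipping CTA converts better than 'Shop Now'",
    kpi="conversion_rate_pct",
    control_value=4.0,
    variant_value=4.8,
    winner="B",
    next_idea="image vs video",
)

# Written to a temporary folder here; in practice, point this at a shared file.
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "test_log.csv"
    append_entry(log_path, record)
    append_entry(log_path, record)
    with log_path.open(newline="", encoding="utf-8") as f:
        logged_rows = list(csv.DictReader(f))

print(logged_rows[0]["lift_pct"])
```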

Common Mistakes & How to Avoid Them

Mistake 1: Changing multiple variables in one test

Solution: Always test one element at a time so you know cause and effect.

Mistake 2: Ending the test too early or with too few conversions

Solution: Use sample‑size calculators; ensure your test has meaningful duration and conversions.

Mistake 3: Not defining baseline and KPI upfront

Solution: Record current metrics (baseline) and clearly define what success looks like.

Mistake 4: Ignoring segmentation

Solution: Run the test on the segment that matters for your goal (location, device type, age group) rather than on the whole audience.

Mistake 5: Not documenting / not iterating

Solution: Maintain a test log; review and refine continuously. When you skip documenting, you lose institutional knowledge.

For marketers working with limited budgets or traffic, these mistakes are especially costly, so setting up a systematic testing process helps you compete more efficiently.

Real‑Life Example: Running A/B Tests for Campaigns

Imagine you are handling a social‑media ad campaign for a Kerala‑based e‑commerce brand selling customised sarees. Here’s how you might apply A/B testing:

Goal: Increase purchases via Facebook/Instagram ads by 15 % and reduce CPA to ₹ 350 or less.

Hypothesis: Changing the CTA from “Shop Now” to “Claim Your Free Shipping Today” will improve conversions because Indian customers respond to free‑shipping incentives.

Audience segment: Women, age 22‑40, based in Kerala and Tamil Nadu, mobile‑only users.

Versions:

- Version A (Control): Image of saree with headline “Latest Collection – Order Now” + CTA “Shop Now”.

- Version B (Variant): Same image and headline, but with CTA “Claim Your Free Shipping Today”. Only the CTA changes, so any difference can be attributed to it.

Traffic split: 50/50.

Duration: 14 days (to collect sufficient conversions).

KPI: Conversion rate (sale completion) + CPA.

Result: Suppose Version B delivers a 20 % higher conversion rate and lowers CPA to ₹ 320. Winner: Version B.

Next step: Roll out Version B with the full budget, then plan the next test, for example image vs video, or a Malayalam headline vs an English one.

This iterative approach helps you optimise each campaign incrementally with data, not guesswork.

How the Entri App Helps You Master A/B Testing

If you’re an aspirant, freelancer, or marketing professional, ready to elevate your skills, the Entri App’s AI‑powered Digital Marketing Course is a strong fit. Here’s how it complements the topic:

- Analytics & Experimentation Module: Covers how to set up experiments, use tools, interpret results, and scale tests.

- Campaign Optimisation Section: Teaches you how to apply A/B testing within ad platforms (Google/Meta etc) with India‑specific case studies.

- Hands‑on Practice: You’ll work through exercises that replicate real‑world scenarios, defining hypotheses, running tests, analysing results.

- AI Tools & Automation: The course introduces emerging AI‑based testing tools that can accelerate your test cycles (important when budgets are tight or competition is intense).

- Localised Context: Indian market focus – you’ll learn how to align testing with Indian consumer behaviour, mobile devices, local festivals, regional languages, INR cost models.

- Certification & Portfolio: Having this course under your belt helps you demonstrate to clients/employers you are capable of data‑driven marketing, not just “set and forget” campaigns.

If you’re serious about moving from managing campaigns to mastering optimisation and testing, enrolling in Entri’s course can give you the structure, skills, and confidence to run impactful A/B experiments.

A Practical Checklist for Your Next A/B Test

Here’s a handy checklist you can save / print and use before your next test:

- Define campaign goal (e.g., increase conversions by 15 %).

- Document baseline metrics (current CPA, conversion rate, CTR etc).

- Formulate a hypothesis (what you will change and why).

- Select one variable to test (headline / CTA / image / audience).

- Create Control (A) and Variant (B).

- Set target audience & segmentation (location, device, age, language).

- Split traffic (50/50 or as agreed) & set test duration (minimum a week or until sample size achieved).

- Monitor but don’t interfere mid‑test (avoid making changes).

- Analyse results: Which version met/beat KPI? Was the difference statistically significant?

- Roll out winner, document learnings, plan next test.

- Update test log: hypothesis, result (lift %, cost impact), next test idea.

- Repeat the cycle for continuous optimisation.

Conclusion

A/B testing is one of the most powerful levers you have to improve your ad performance, whether you’re a beginner in digital marketing or a seasoned professional. By defining clear goals, testing one element at a time, analysing results, and iterating consistently, you move from guesswork to data‑driven optimisation.

In markets with mobile‑first users, price sensitivity, regional diversity, and increasing competition, the edge you gain from running systematic tests can be the difference between average and outstanding campaigns.

If you’re ready to go beyond just “setting ads” and move into “optimising ads intelligently”, consider the Entri App’s AI‑powered Digital Marketing Course. It extends the lessons here, gives you structured learning, hands‑on practice and tools to truly master performance marketing.

Now’s the time: pick your next campaign, draft a hypothesis, set up your A/B test and let the data guide your way to better results. Happy testing!

Frequently Asked Questions

How long should I run an A/B test?

You should run until you have enough data for statistical significance. Many sources suggest at least one week, but if traffic is low you may need more time.
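As a rough planning aid, you can turn a required sample size into a duration. The sketch below assumes you already know how many visitors each variant needs and how many visitors arrive per day. Rounding up to whole weeks is an added assumption here, made so that each version sees every day of the week equally. The function name is hypothetical.

```python
import math


def estimate_duration_days(sample_per_variant: int, daily_visitors: int,
                           variants: int = 2, min_days: int = 7) -> int:
    """Estimate test length in days, rounded up to full weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    days = math.ceil(sample_per_variant * variants / daily_visitors)
    days = max(days, min_days)      # never shorter than the one-week minimum
    return math.ceil(days / 7) * 7  # round up to whole weeks


# Hypothetical inputs: 18,000 visitors needed per variant, 3,000 visitors per day.
print(estimate_duration_days(18_000, 3_000))  # 12 days of traffic -> 14 days
```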

Can I test more than one element at the same time?

Technically yes, but it’s not recommended if you want a clear result, because you won’t know which change caused the difference. Stick to one variable per test.

What if my traffic or conversions are too low to reach significance?

In that case:

- Extend the test duration.

- Combine traffic sources or increase budget to get more data.

- Choose bigger changes (that yield bigger effect) so lifts are more obvious.

Once you have more data to work with, you can go back to testing smaller elements and build up gains gradually.

Should I always use 50/50 traffic split?

50/50 is clean but you can use other splits (e.g., 40/60) if you are cautious with budget. The key is that assignment is random and both groups are comparable.

Can I test ads across different platforms (Meta vs Google) with A/B tests?

Yes, but be careful: each platform has its unique audience behaviour, bidding algorithms and metrics. If you test across platforms, treat each platform as its own experiment or have matched audience criteria for fair comparison.

What metrics should I pick for my campaigns?

Typical metrics: CTR, CPC (₹), conversion rate, CPA (₹), ROAS. Also consider breaking results down by device type (especially mobile), region (state‑wise), and language segment. Choose the metric aligned with your campaign goal.

How many tests should I run per month?

There’s no fixed number, but aim for continuous testing. If you completed one test and have results, plan the next one immediately. The culture of experimentation matters more than sheer volume.

Does A/B testing guarantee big results?

No guarantee. Some tests may show small lifts or none at all. The idea is to learn, refine and improve over time. Many sources emphasise that only a subset of tests produce significant results.

Will Entri’s Course teach me A/B testing tools?

Yes, the Entri App’s AI‑powered Digital Marketing Course covers analytics, testing frameworks, hands‑on projects (including A/B testing) and tools. This helps you apply the concepts you read about here, understanding why you test and how you interpret results in real campaigns.